Teams invest significant effort in making their solution transparent, easy to troubleshoot, and stable by instrumenting their product and technology and connecting that instrumentation to a monitoring system (e.g. Prometheus, one of the popular solutions). One question remains: who monitors the monitoring? If the monitoring is down, you won’t get any alarms about a malfunction of the product, and you are back at the beginning. So you add monitoring of the monitoring system, and the tough availability game starts. If two components that depend on each other each have 95% availability, you effectively achieve only about 90%. I believe you get the rules of the game we play.
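The arithmetic behind that figure can be sketched as follows, assuming (an assumption, not something measured here) that the two components fail independently and that each is available 95% of the time. The first line is the share of time during which both are up at once; the second is the share of time during which both are down at once, which is when a monitoring outage would go unnoticed.

$$
A_{\text{both up}} = A_1 \cdot A_2 = 0.95 \times 0.95 = 0.9025 \approx 90\%
$$

$$
P_{\text{both down}} = (1 - A_1)(1 - A_2) = 0.05 \times 0.05 = 0.0025 = 0.25\%
$$

Which of the two numbers matters depends on whether you need every link of the chain working or only need at least one watcher alive.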

Dead man’s switch

How is monitoring of the monitoring set up in practice? Well, the implementation differs, but the core idea is the same, and it works well even in the army! It is called a dead man’s switch. If we are supposed to receive a signal that triggers an alarm at an unknown moment, we need a guarantee that the signal can get through at any time. Reversing the logic gives us that guarantee: we receive a signal constantly, and the alarm is triggered when the signal stops arriving. So simple! This principle (a heartbeat) is used in multiple places, e.g. clustering.

Prometheus dead man’s switch

Some monitoring tools have this capability built in, but Prometheus doesn’t. So how do we achieve it, in order to sleep well knowing that the watcher is being watched?

Prometheus alert rule

We need to set up a rule that is constantly firing in Prometheus. It can be a rule like this:

    - name: monitoring-dead-man
      rules:
      - alert: "Monitoring_dead_man"
        expr: vector(1)
        labels:
          service: deadman
        annotations:
          summary: "Monitoring dead man switch should always fire alert"
          description: "Monitoring dead man switch for probing alert path"

Here the service label will be used in the next step (you can use a similar alternative).
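For context, here is a minimal sketch of how such a rule might sit in a complete Prometheus rules file. The top-level `groups:` key is the wrapper Prometheus expects around rule groups; the file name in the comment is only a hypothetical example.

```yaml
# dead-man.rules.yml (hypothetical file name, listed under rule_files in prometheus.yml)
groups:
  - name: monitoring-dead-man
    rules:
      - alert: Monitoring_dead_man
        # vector(1) always returns a sample, so this alert is always firing
        expr: vector(1)
        labels:
          # label used by Alertmanager to route this alert to the snitch
          service: deadman
        annotations:
          summary: "Monitoring dead man switch should always fire alert"
          description: "Monitoring dead man switch for probing alert path"
```

A file like this can be checked before deployment with `promtool check rules`.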

Alertmanager dead man’s switch route

Now we need to create the heartbeat. The rule on its own would fire once, be propagated to the Prometheus Alertmanager (the component responsible for managing alerts), and that would be all. We need check-ins at a regular interval. The interval is given by the availability you want to achieve, since it adds to your reaction time. You can achieve this behaviour with a special route for the alert in Alertmanager:

    - receiver: 'DEAD-MAN-SNITCH'
      match:
        service: deadman
      repeat_interval: 5m
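As a rough sketch, this route would typically be nested under the root route of the Alertmanager configuration. The `default` receiver name and the `group_wait` and `group_interval` values below are assumptions chosen for illustration, not settings from the original setup.

```yaml
route:
  # hypothetical fallback receiver for all other alerts
  receiver: default
  routes:
    - receiver: 'DEAD-MAN-SNITCH'
      # newer Alertmanager versions prefer the equivalent `matchers` syntax
      match:
        service: deadman
      # assumed values: send the first notification without delay
      group_wait: 0s
      group_interval: 1m
      # resend the still-firing alert every 5 minutes as the heartbeat
      repeat_interval: 5m
```

Repeats are evaluated on `group_interval` ticks, so it is worth keeping `group_interval` no larger than `repeat_interval` if the check-in cadence matters.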

Dead Man’s Snitch receiver

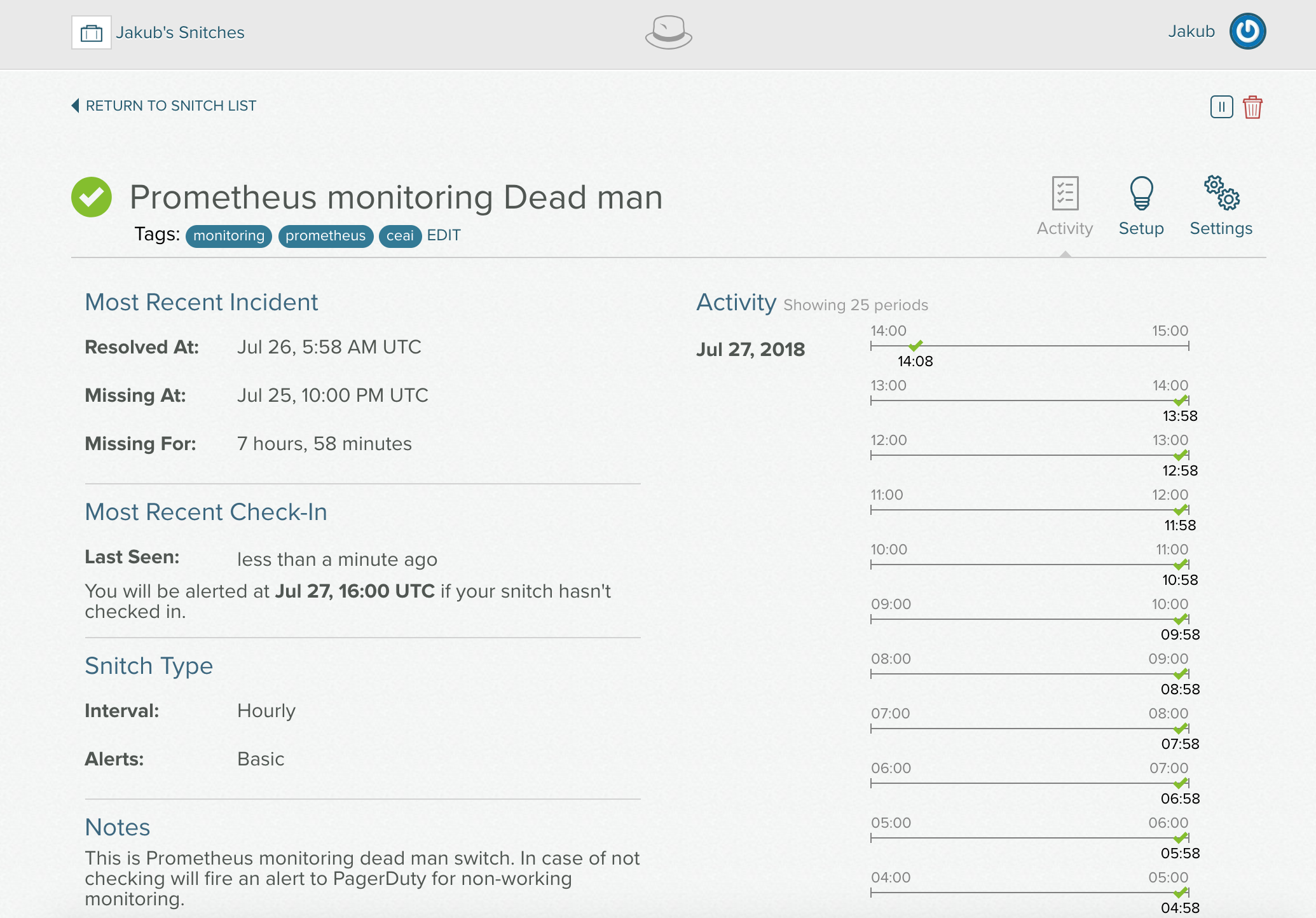

Now we need to reverse the alarm trigger logic. In our particular case, we use Dead Man’s Snitch, which is great for monitoring batch jobs, e.g. a data import to your database. It works in such a way that if you do not check in within the specified interval, it triggers, with the lead time given by the interval.

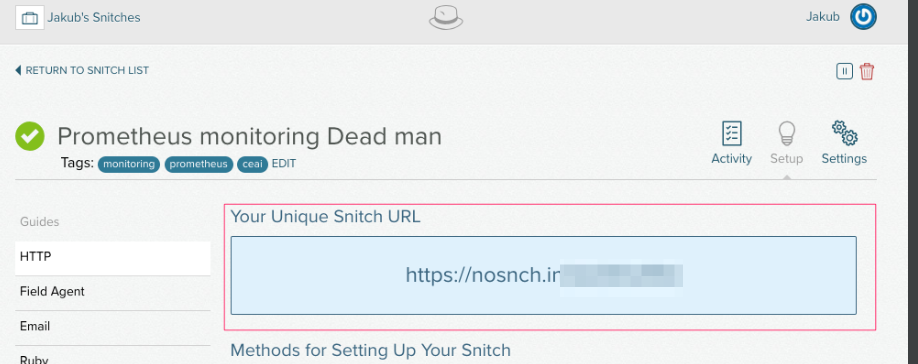

You can specify rules for when to trigger, but those are details of the service you use. All you need to add to Alertmanager is a receiver definition that checks in with a particular snitch, as follows:

    - name: 'DEAD-MAN-SNITCH'
      webhook_configs:
      - url: 'https://nosnch.in/your_snitch_id'

The URL is taken from the snitch you created; the one above is only an example.
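In a full Alertmanager configuration the receiver lives under the top-level `receivers:` list. The sketch below assumes a second, hypothetical `default` receiver for ordinary alerts, and sets `send_resolved: false` as a cautious choice so that only firing notifications count as check-ins; whether you want that depends on your setup.

```yaml
receivers:
  # hypothetical receiver for regular alerts; configure your real channel here
  - name: default
  - name: 'DEAD-MAN-SNITCH'
    webhook_configs:
      # placeholder URL: replace your_snitch_id with the ID of your own snitch
      - url: 'https://nosnch.in/your_snitch_id'
        # assumption: do not notify the snitch when the alert resolves
        send_resolved: false
```

The resulting file can be validated with `amtool check-config` before Alertmanager is reloaded.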

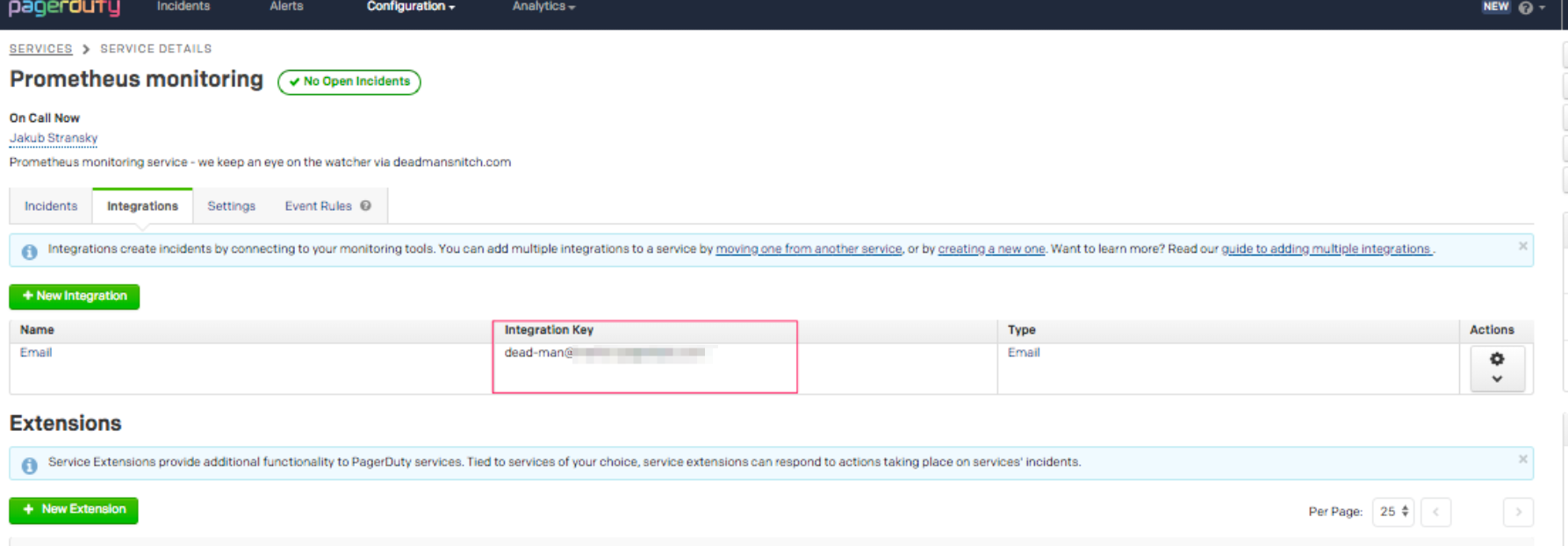

PagerDuty dead man’s switch

The last thing you need to do is integrate the trigger with the system you use for on-call rota management, e.g. PagerDuty. For this you can use the integration between Dead Man’s Snitch and PagerDuty, or you can integrate via the PagerDuty email trigger, but that will be less reliable as it can change unnoticed.

Summary

Prometheus doesn’t have a heartbeat service by default, but we achieved the desired functionality by using Alertmanager’s ability to resend alerts in combination with a third-party service that reverses the trigger logic. Let me know if it is working for you or whether you found a better way to achieve the desired functionality. You can leave a comment below or reach me on Twitter.

Thanks for the share, and the example!

The chance of the monitor of the monitor failing at the same time in your example is actually (0.05*0.05), so the uptime would be 99.75%.

In the case of MTTF 5% for both services yes. Why do you consider 5%?

Great reading your blog post
